MagicAnimate Playground

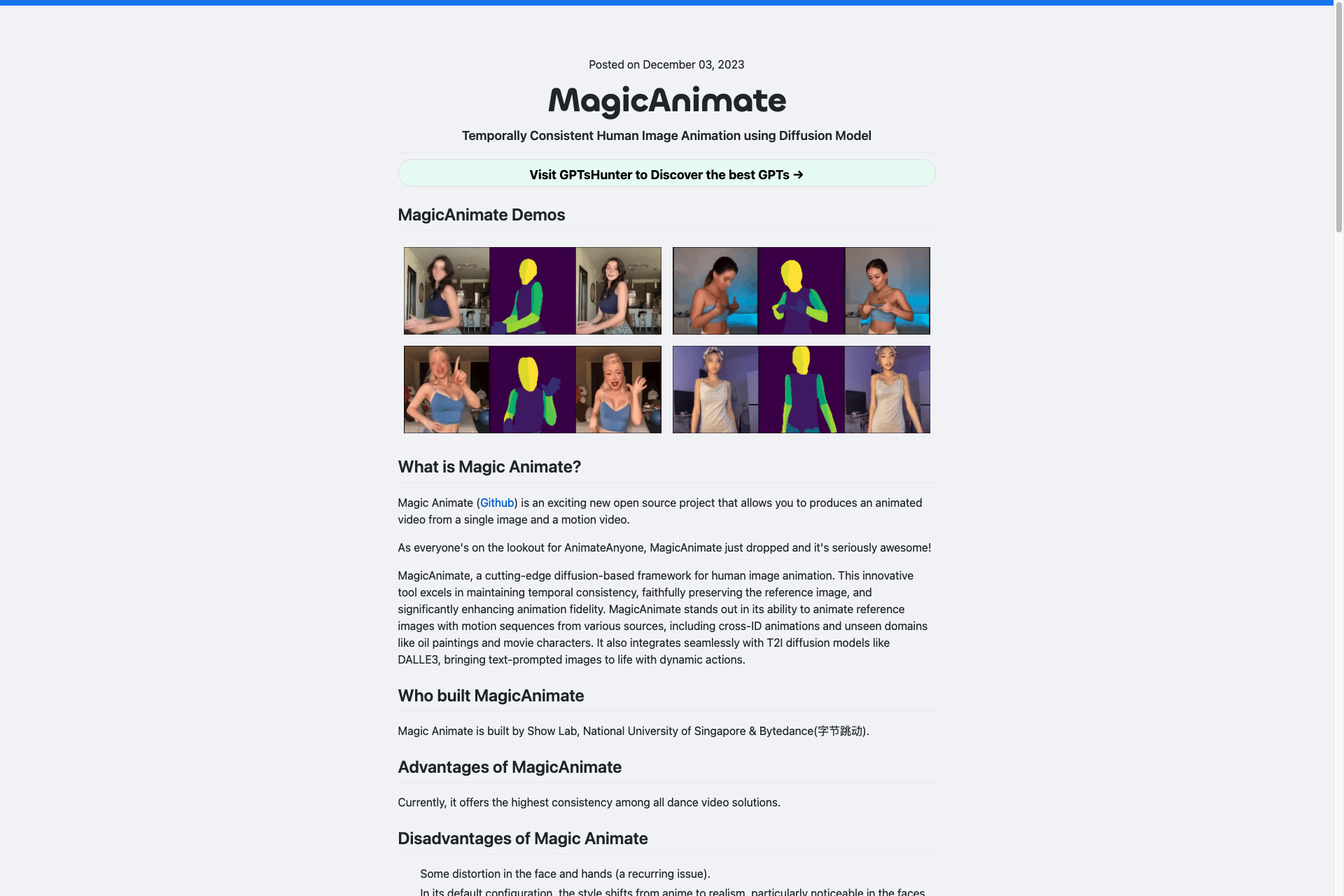

MagicAnimate is an open-source project that simplifies animation creation, letting you produce an animated video from a single image and a motion video. This website aggregates related content for easy learning and practical use.

Products Related to MagicAnimate Playground

Gen A.F is a media outlet focused on keeping readers informed and up to date on the most important technology of today: generative AI.

An openly accessible model that excels at language nuances, contextual understanding, and complex tasks like translation and dialogue generation.

Transform your room colors instantly and precisely with changeroomcolor.com! Powered by Spacely AI, it lets you upload a room image and change wall, floor, or ceiling colors within seconds. Easy to use, with precise AI-driven results.

16x Prompt helps developers compose the perfect prompt with source code and context for ChatGPT. Add context, source code and formatting instructions to prompts easily.

Upload your short video, wait for the subtitles to be generated automatically, edit them as you wish, and add emojis. Then build, download, and post your final subtitled video anywhere. Boost your social video engagement with automated subtitles.

Introducing a book creation assistant that shortens the writing process from months to mere hours. No more endless drafting: your complete book is ready at a click.

Aiconly is a platform that allows users to easily generate custom icons. Users can create icons for personal or professional use with minimal effort.