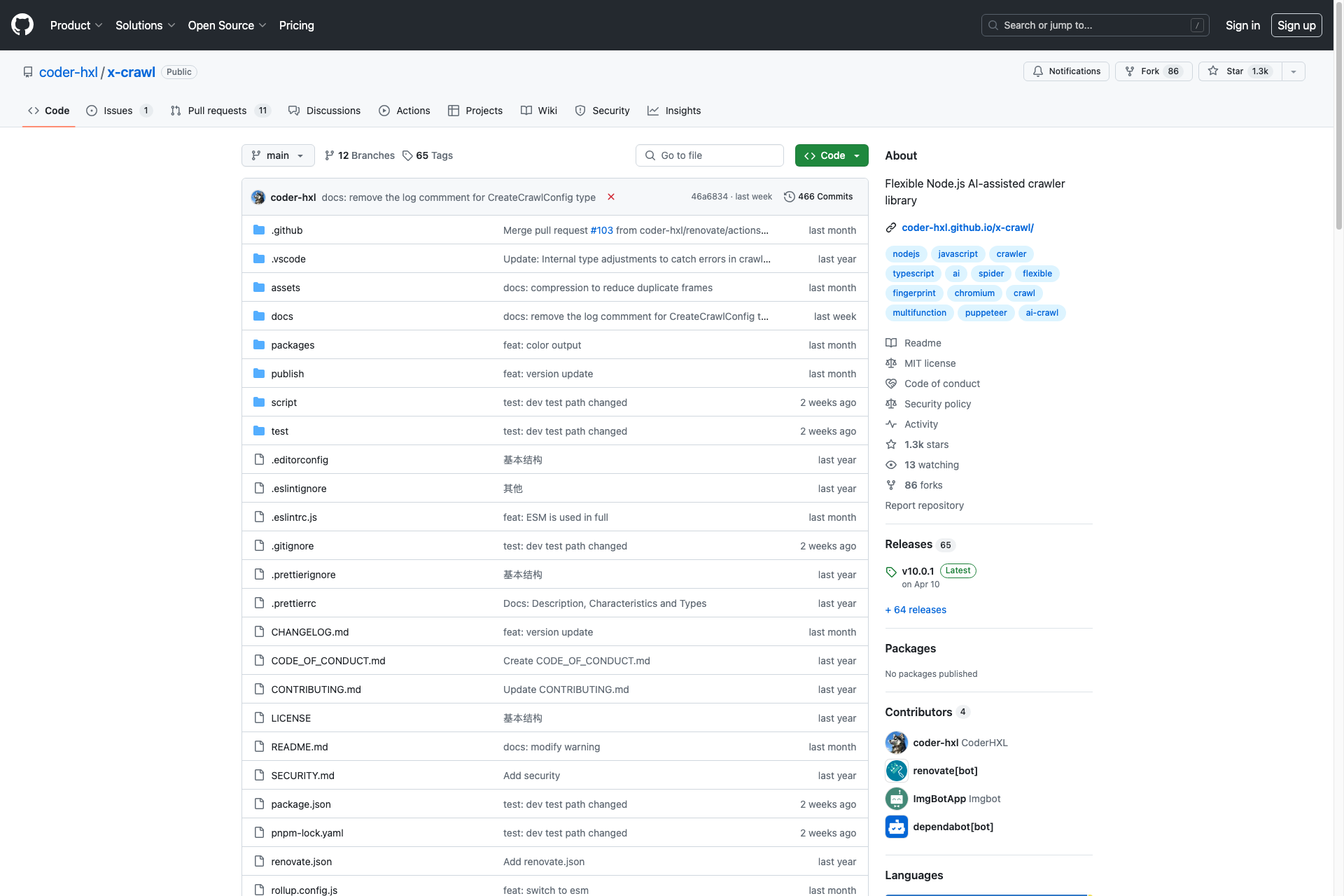

x-crawl

x-crawl is a flexible, AI-assisted crawler library for Node.js. Its flexible usage and AI assistance features make crawling more efficient, intelligent, and convenient.

Products related to x-crawl

Save hours of searching for the perfect image by creating high-quality images in seconds. Perfect for designers, marketers, and creators. Join now and get 2 credits for free.

Granola is an AI-powered notepad that makes taking & using meeting notes a breeze. It blends your own notes with smart transcription to capture every detail. With its simple design & advanced AI, Granola ensures your notes are not just recorded, but useful.

Explore the capabilities of Sora. Discover the latest and trending videos and prompts generated by Sora at madewithsora.com 🙌

Prepare for your interviews with our AI-powered PrepMasterAI platform. Engage in realistic mock interviews to enhance your skills, gain confidence, and ace your next interview.

AI Cover Letter Creator offers a seamless way to create personalized cover letters using AI. Provide your CV and job description, and let us do the rest.

AI Writer is your go-to guide for exploring and choosing the best AI writing tools tailored to your needs. It offers insights, comparisons, and in-depth reviews of various AI writing software to enhance your content creation process.