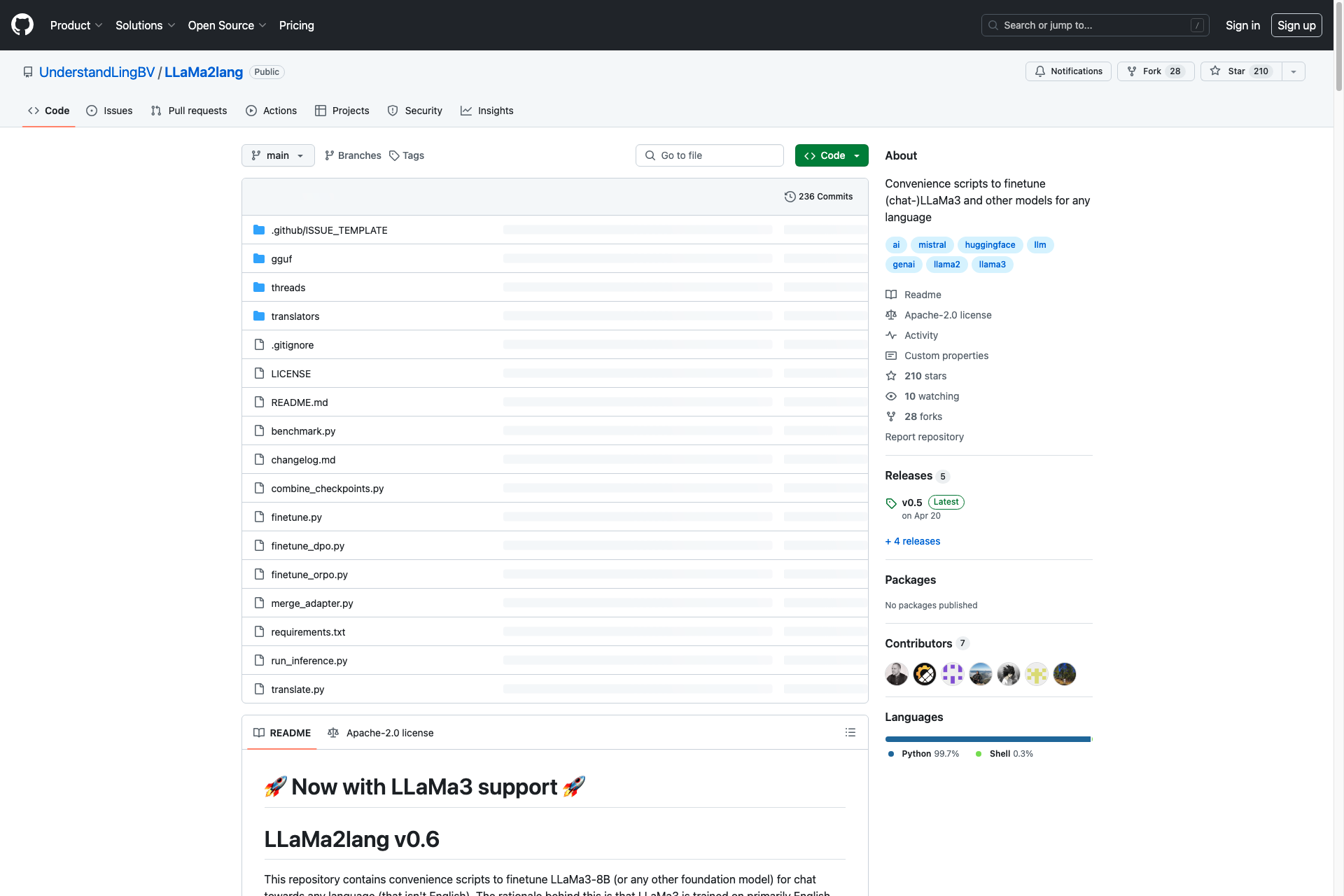

LLaMa2Lang

Fine-tune LLaMa2-7b for chat in any non-English language. The rationale is that LLaMa2 was trained primarily on English data; while it works to some extent in other languages, its performance there is poor compared to English.
