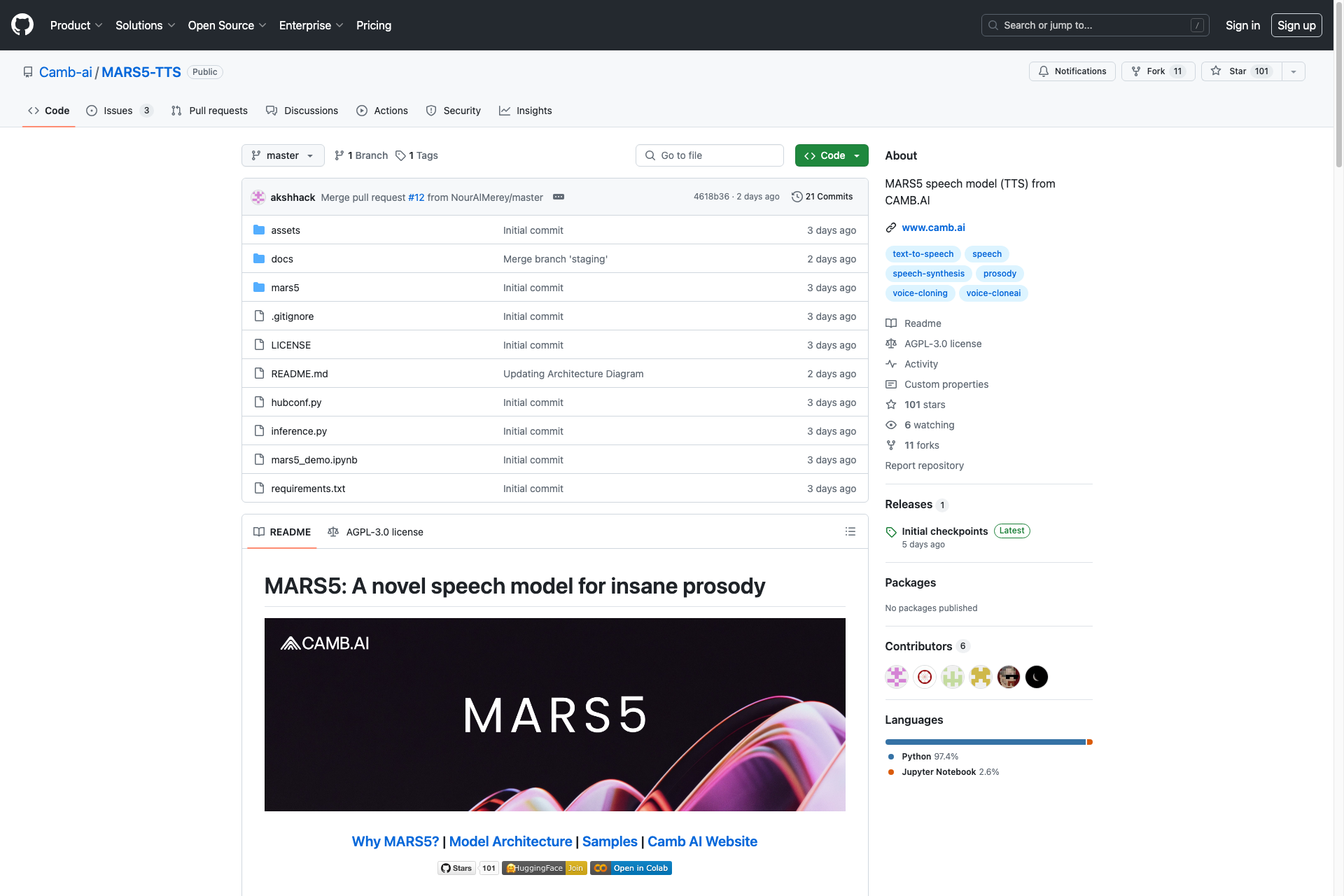

MARS5 TTS

MARS5 is an open-source TTS model that replicates performances from 2-3 seconds of reference audio in 140+ languages, even in extremely tough prosodic scenarios such as sports commentary, movies, and anime. Join our Discord today: https://discord.com/invite/ZzsKTAKM

Key Features of MARS5 TTS

Two-Stage AR-NAR Pipeline

Combines an autoregressive (AR) stage with a non-autoregressive (NAR) stage to improve the quality of generated speech.

Natural Prosody Control

Easily guide the prosody of generated speech using punctuation and capitalization in the input text.

Deep Cloning Capability

Achieve high-quality voice cloning by providing a short audio reference and its transcript.

Fast and Shallow Inference

Synthesizes speech quickly from the audio reference alone, with no reference transcript required, for applications where speed matters most.

Flexible Audio Reference Length

Accepts audio references ranging from 1 to 12 seconds, optimizing performance based on input length.

Easy Integration with Python

Installs with pip and runs from Python, so developers can add it to their own projects with little setup.
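The reference-related features above (deep cloning with a transcript, shallow inference without one, and the 1 to 12 second reference window) can be sketched as a small pre-flight check run before any synthesis call. This is only an illustration under stated assumptions: `ClonePlan`, `plan_clone`, and the two constants are hypothetical names invented here, not part of the MARS5 package, and the block does not load or call the model itself.

```python
from dataclasses import dataclass
from typing import Optional

# Bounds taken from the feature list above (1 to 12 seconds of reference audio).
MIN_REF_SECONDS = 1.0
MAX_REF_SECONDS = 12.0


@dataclass(frozen=True)
class ClonePlan:
    """Hypothetical container for the chosen cloning mode (not a MARS5 class)."""
    deep_clone: bool
    ref_seconds: float
    ref_transcript: Optional[str]


def plan_clone(ref_seconds: float, ref_transcript: Optional[str] = None) -> ClonePlan:
    """Pick deep or shallow cloning from what the caller can provide.

    Assumption: deep cloning is used whenever a non-empty transcript of the
    reference audio is available, and shallow cloning otherwise.
    """
    if not (MIN_REF_SECONDS <= ref_seconds <= MAX_REF_SECONDS):
        raise ValueError(
            f"reference audio must be {MIN_REF_SECONDS:g}-{MAX_REF_SECONDS:g} s long, "
            f"got {ref_seconds:g} s"
        )
    # Treat a missing or whitespace-only transcript as "no transcript".
    transcript = ref_transcript.strip() if ref_transcript else None
    transcript = transcript or None
    return ClonePlan(
        deep_clone=transcript is not None,
        ref_seconds=ref_seconds,
        ref_transcript=transcript,
    )


if __name__ == "__main__":
    # Deep clone: a 6 s reference plus its transcript.
    print(plan_clone(6.0, "Welcome back to the second half!"))
    # Shallow clone: a 3 s reference with no transcript.
    print(plan_clone(3.0))
```

Keeping this check separate from the synthesis call means a reference clip that is too short or too long is rejected before any model weights are loaded.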
