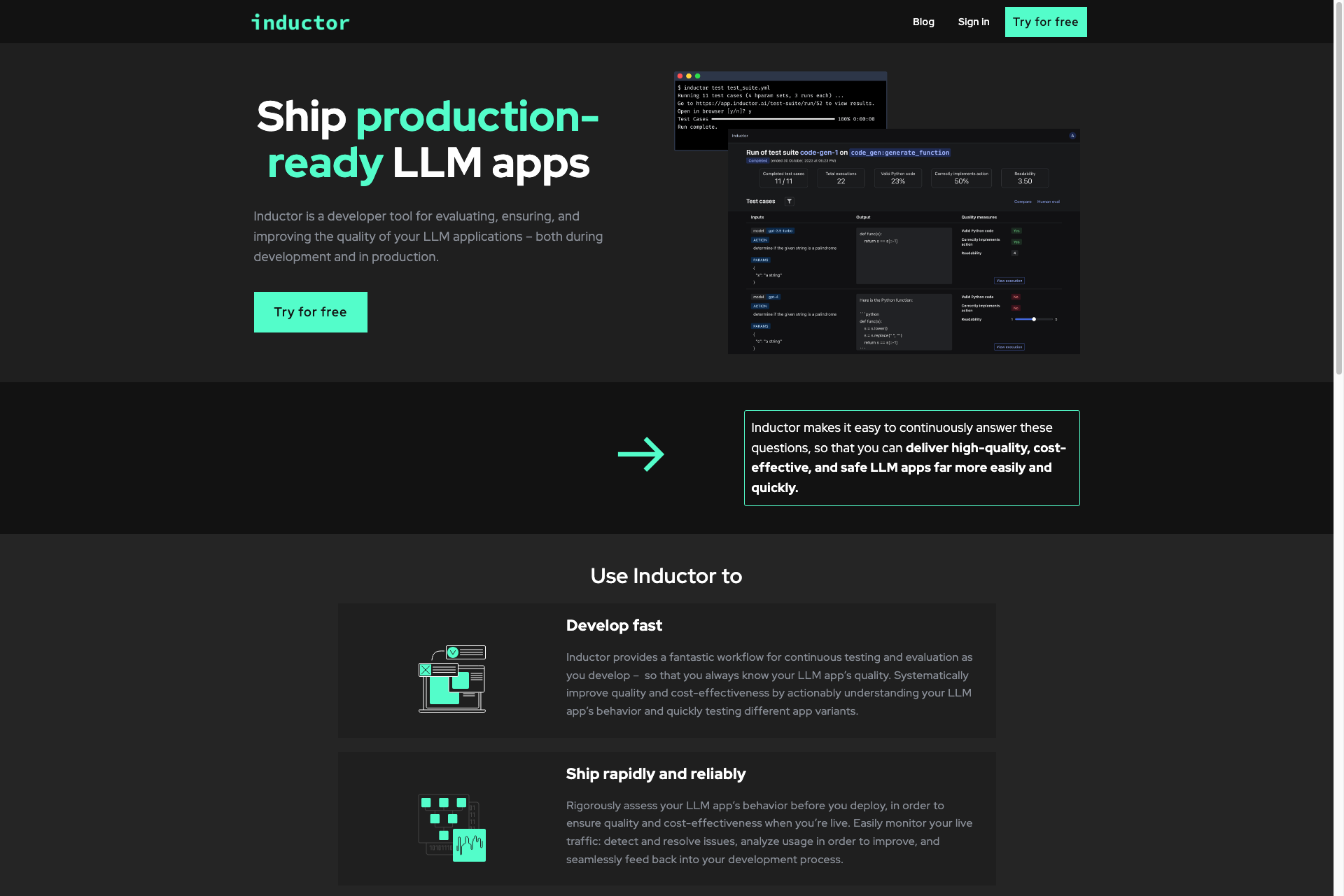

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of your LLM applications – both during development and in production.

Products Related to Inductor

Brewed allows you to build any web component with AI, from a dropdown, all the way to full landing pages. It's a moonshot idea to someday achieve web development entirely with the help of AI.

Explore AI's future with GPT-S Navigator, your access to OpenAI's top GPT-S models. This ultimate data product offers elite insights, a vast prompt repository, and personalized recommendations to enhance your GPT-S journey. Excel in AI with GPT-S Navigator!

A newsletter focusing on AI art and animation news, tools, prompts, and more. Join over 2000 like-minded AI creatives and take your AI art, animations, and videos to the next level.

Ogma is an interpretable, continuously learning, symbolic General Problem-Solving Model (GPSM). Ogma is based on a symbolic sequence modelling paradigm for solving tasks that require reliability and complex decomposition, without hallucinations.

ad:personam Self Serve DSP elevates your advertising with AI. Effortlessly launch, manage, and optimize programmatic advertising campaigns using advanced tools like Insights CoPilot and AI Assistant Planner. No minimum ad spend required.

Stock analysis as simple as asking Fink AI. Fink AI Beta has been trained to provide financial analysis and conclusions based on StockFink's predictive algorithms and historical data.

Your comprehensive online resource for the latest in renewable energy technology and construction details. Find specialized companies and expand your knowledge.