Composable Prompts

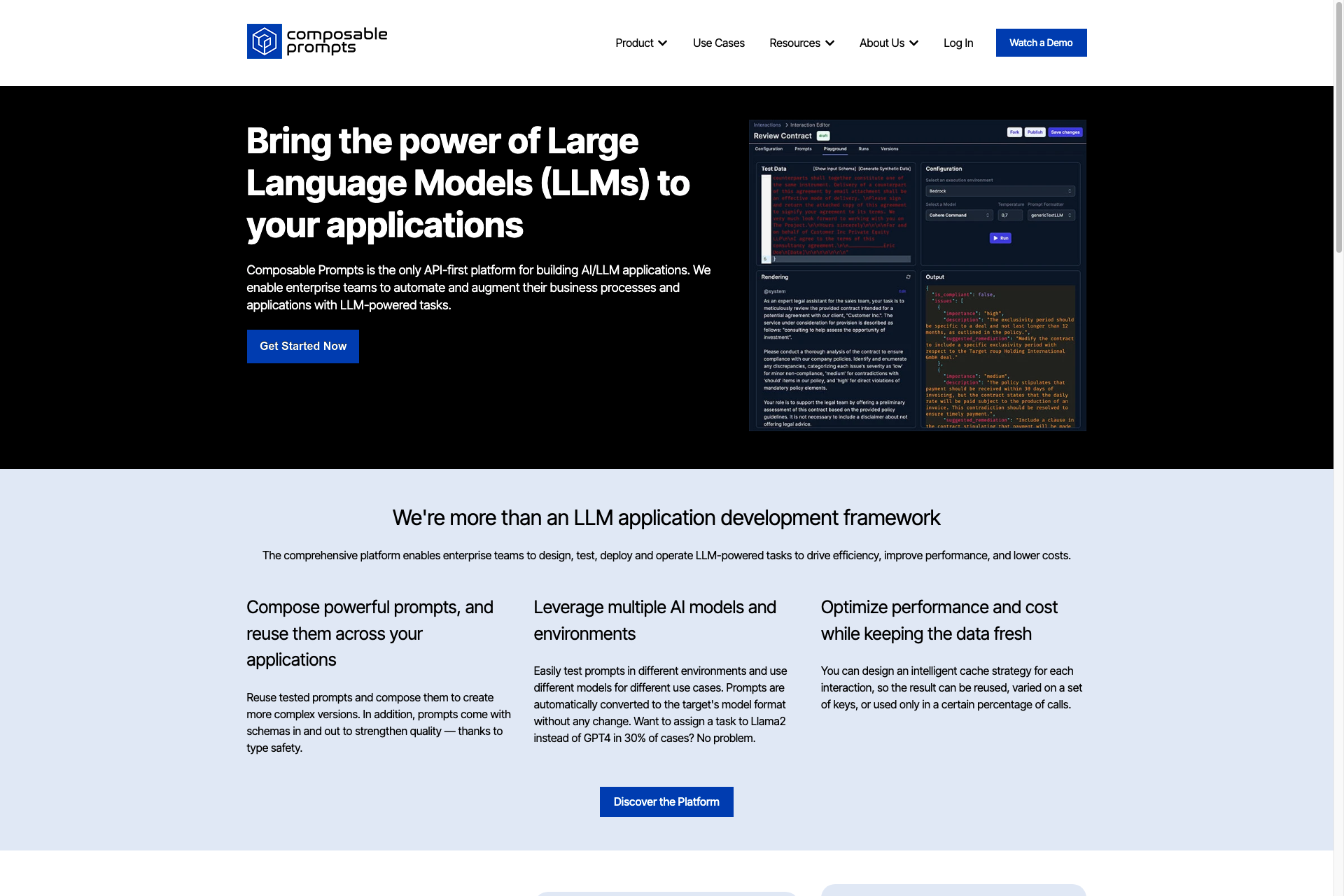

Composable Prompts facilitates rapid API development atop LLMs to power applications. It brings composition, templating, testing, orchestration, caching, load balancing, and visibility to the world of LLMs.

Products Related to Composable Prompts

Create a celebrity's voice simply and quickly with the celebrity AI voice generator. Anyone's voice can be created.

Aixplora: A customizable chatbot widget, easy to integrate via script tags or WordPress. Offers humor and varied personalities, powered by ChatGPT. Add your own knowledge for tailored interactions on any website.

Your Fast, Free, AI-Powered Toxic Content Detector. With HateHoundAPI, you can now swiftly identify and filter out toxic content in your web applications. Powered by state-of-the-art AI technology.

MixPix AI is a groundbreaking platform transforming how fans interact with their favorite influencers. MixPix AI bridges the gap between the virtual and the real, offering followers, fans, and others the extraordinary ability to generate photos alongside AI

Article Factory is a powerful web application tailored for editing news, blogs, and articles. Harnessing the capabilities of Generative Artificial Intelligence, it effortlessly generates unique, high-quality content, ensuring engaging and original output.

TemPolor, the AI Music Maestro in your pocket! Unleash your inner Mozart with our cutting-edge AI music product. TemPolor transforms your inputs into personalized music, creating a symphony of sound that is uniquely yours.