ChatTTS

ChatTTS is a voice generation model hosted on GitHub at 2noise/chattts. It is designed specifically for conversational scenarios, which makes it well suited to applications such as dialogue tasks for large language model assistants and conversational audio and video introductions. The model supports both Chinese and English and produces high-quality, natural-sounding speech, a result of training on approximately 100,000 hours of Chinese and English data. The project team also plans to open-source a base model trained on 40,000 hours of data to support further research and development by the academic and developer communities.

Products Related to ChatTTS

WhisperUI is a text-to-speech and speech-to-text service powered by the OpenAI Whisper API. With WhisperUI, you can use your own OpenAI API keys to access both services affordably.

join the daily work sesh @ freshman.dev/today-greeter to get started! i've used /greeter every day for 3 months now (ever since i built it). i made it for myself: i used to log what i did each day in a plain text file, and this is kinda better. NEW: opt-in AI suggestions, giving you 3 specific things to do with your friends next, based on your actual hangouts!

Recently my friend unloaded emotionally on me, then said, "I just needed to vent." I thought, "Yeah, me too." Then I thought, "Maybe others do too. What if..." What if there was a quick chatbot designed for venting? Let's find out.

AI/ML API gives developers access to over 100 AI models through a single API, available around the clock. It claims GPT-4-level performance at 80% lower cost, along with seamless OpenAI compatibility for easy migration.

MONET is an AI-powered data analysis tool for non-data experts. Ask questions about your data and quickly get deep insights and visuals with zero prep. The beta version currently accepts only data with a time column, but it will eventually support more types of data.

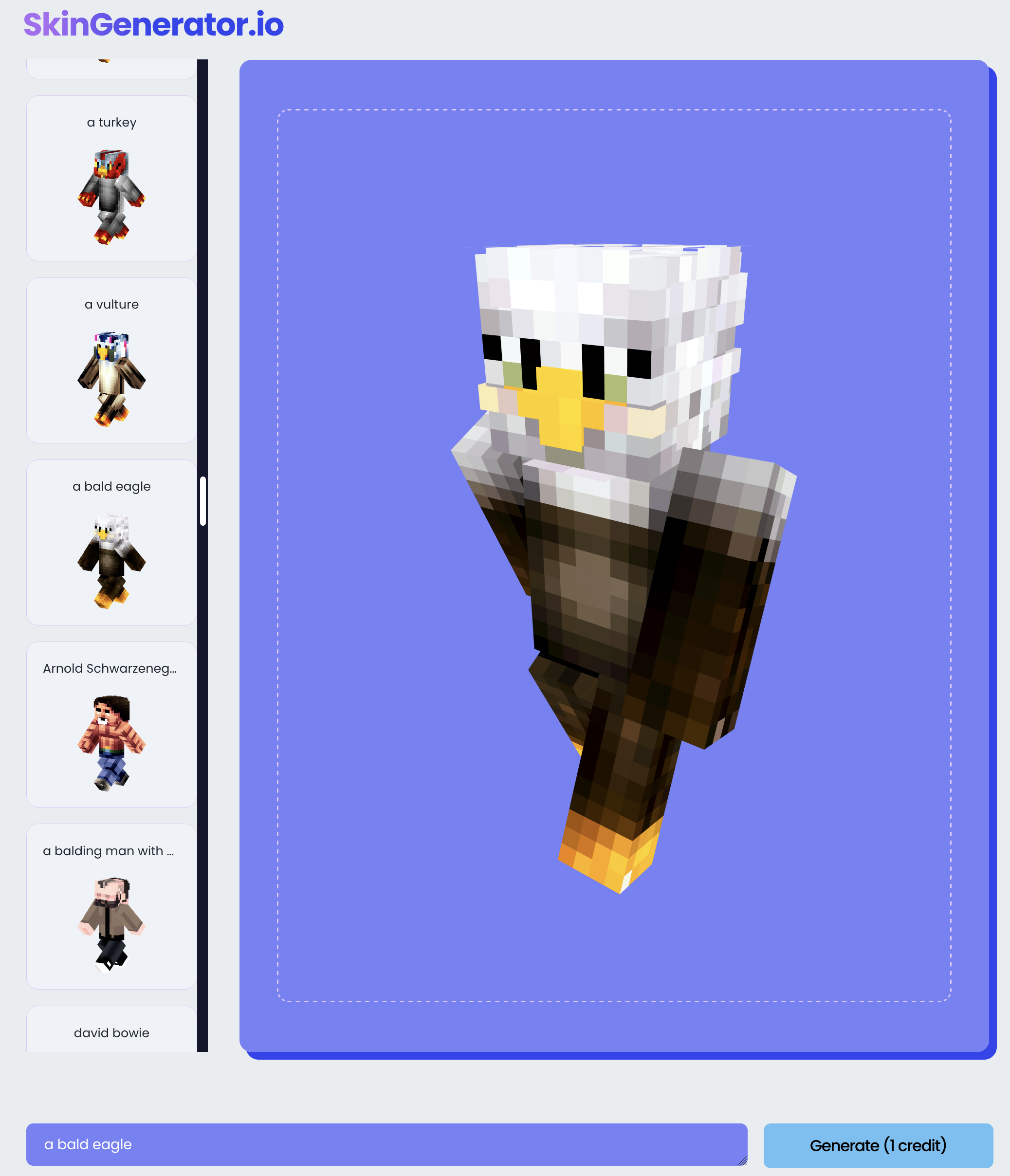

The Minecraft Skin Generator uses a custom fine-tuned Stable Diffusion model to generate usable Minecraft skins from a text prompt.

Tell Me, Inge… is a cross-platform VR/XR experience that enables users to talk to Holocaust survivor Inge Auerbacher, a child survivor of the Theresienstadt ghetto. Through conversational AI, users are immersed in Inge's stories and hand-drawn 3D animations.